I was writing a post about corporate governance in startups when this happened:

People in tech have universally condemned Sam Altman’s firing.

The reaction by VCs and the business community has been apoplectic. He’s a superstar who built the world’s most important startup. What was the board thinking?

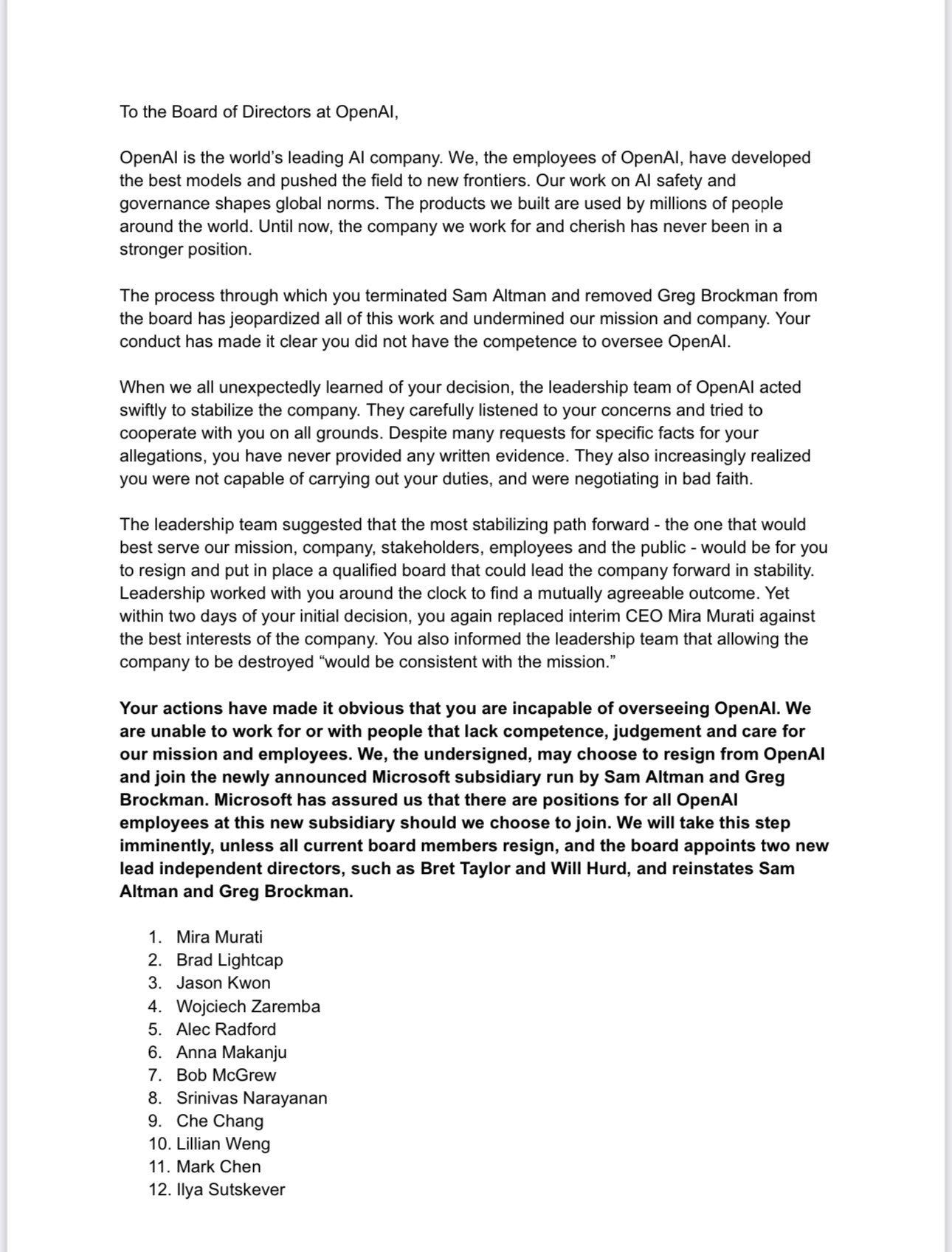

The majority of OpenAI employees have threatened to resign unless Altman is reinstated:

Founders have spoken up: boards are bad and you’d better watch out or they’ll come for you too!

Everyone is blaming the board. To most, it’s obvious they messed up. They’re “inexperienced” and “not qualified.”

I have a more provocative question.

Under what circumstances would the board be 100% right?


An intentional governance structure

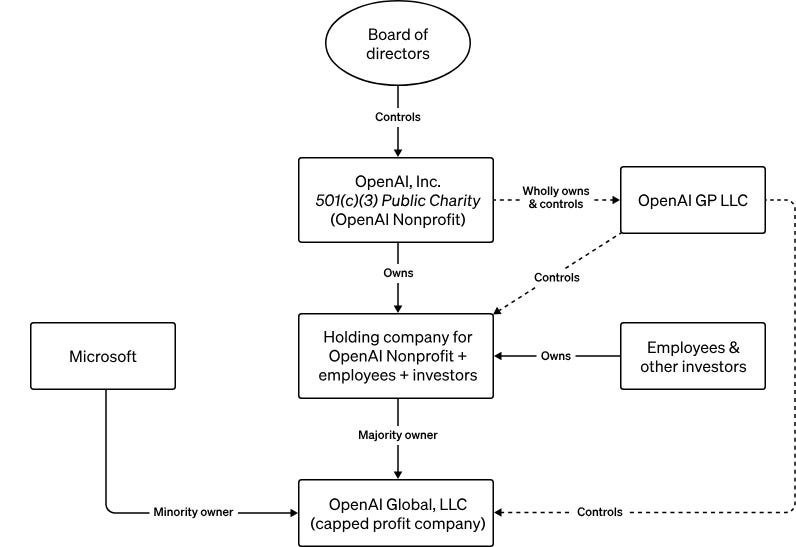

In case you didn’t know, this is OpenAI’s structure:

It’s not a typical structure and may not even be the best one for OpenAI. But it was set up this way on purpose.

The company that makes ChatGPT, OpenAI Global LLC, does not have its own board of directors. Directors sit on the board of OpenAI Inc., the public charity. No investors, not even Microsoft, have a board seat.

The board is also majority independent. That’s not an accident. I guarantee you that your board is not majority independent.

If you follow the lines marked “control” in the org chart, you’ll see that this entire structure was designed to serve the nonprofit parent’s mission around AI. Even investor returns, the ultimate long-term driver of a startup’s equity value, are capped.

Independence by design

So we have a structure that lets a majority-independent board act without answering to the for-profit entity.

That says one thing: that independence is meant as a failsafe, specifically against artificial general intelligence becoming a threat to humanity.

This grinds against the “move fast and break things” mantra of tech leaders.

But not all tech leaders.

In March 2023, many signed an open letter calling for a six-month pause on training AI systems more powerful than GPT-4 (the pause did not happen). One of the signatories was Elon Musk, a co-founder of OpenAI.

A hypothetical defence of the OpenAI board

Only a handful of people know why Sam Altman was fired. But rather than speculate, let’s examine how the board is being criticized.

“You can’t fire a CEO who has delivered such incredible results.”

The OpenAI charter does not mention profit or shareholder value. It says “our primary fiduciary duty is to humanity.” The success of OpenAI as a company is not the main measure of the CEO’s performance.

“If all the employees quit, that proves the board made a mistake.”

It will definitely be hard for OpenAI to achieve its mission without its people. But if the board felt the CEO was acting against the charter, their duty would be to act regardless of the risk of a mass exodus of staff.

“They didn’t consult investors like Microsoft, which had invested billions.”

A heads-up would have been nice, but the org chart is clear. No investor has a board seat or a vote on governance matters like firing the CEO. It will be difficult for investors to sue the board when the structure explicitly makes profit and shareholder value subservient to the mission.

“They are slowing down the progress of AI development just when it is taking off.”

This is true. But the mission is “to ensure that artificial general intelligence (AGI)—by which we mean highly autonomous systems that outperform humans at most economically valuable work—benefits all of humanity.” There’s no mention of speed being a benefit, and many would argue the exact opposite.

I think it’s entirely possible the board did not go rogue or act out of sheer stupidity. Perhaps, instead, they felt a line had been crossed, one serious enough to justify the nuclear option of firing the CEO.

Stakeholders vs governance

What’s happening with OpenAI is a conflict between a historically successful company and its original social mission. The loudest voices right now are those that can’t believe such a successful startup is being gutted by bad decisions.

But corporate governance is not just about the majority exercising its control (though the tech industry has a strong libertarian impulse that reduces it to just that).

OpenAI may have an unusual structure, but it’s not unique in serving multiple stakeholders. All companies do that when they balance shareholder value with the needs of employees, customers, the environment, and society.

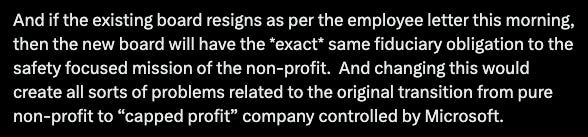

Here’s an interesting thought from Gavin Baker:

Whatever has happened between the board and Sam Altman is just a prelude to a much bigger fight over OpenAI’s mission and governance.